ETL - what is it?

- ETL – you need to know this:

- What is ETL?

- How the ETL Process Works – Three Stages

- When ETL, and when streaming?

- ETL Tools – Overview of the Ecosystem and Selection Criteria

- PIMCORE Datahub and ETL – imports/exports in practice

- Summary

- FAQ – Frequently Asked Questions

ETL is a mature, still fundamental method of information integration between systems – from data warehouses, through business applications, to martech platforms. From this article, you will learn what ETL is, when it is worth using it (and when it is better to opt for alternatives), what the ETL process looks like step by step, which tools to use, and how to turn these principles into practice in the Datahub module of the PIMCORE platform.

ETL – you need to know this:

- ETL (Extract, Transform, Load) is the process of extracting data from various sources, transforming it, and loading it into a target system, with the aim of creating a consistent, high-quality data set for analysis.

- The choice between ETL, ELT, and streaming depends on the project's needs: ETL is effective for complex transformations before loading, ELT — when computations are performed by the target system (e.g., in the cloud), and streaming — when real-time processing is crucial.

- ETL tools include both open-source solutions (Pentaho, Talend) and cloud services (AWS Glue, Azure Data Factory). The choice depends on the scale of data, environment, budget, and team competencies.

- Good practices include versioning and testing processes, separating data zones (raw, processed), ensuring idempotency, and implementing monitoring and security measures.

What is ETL?

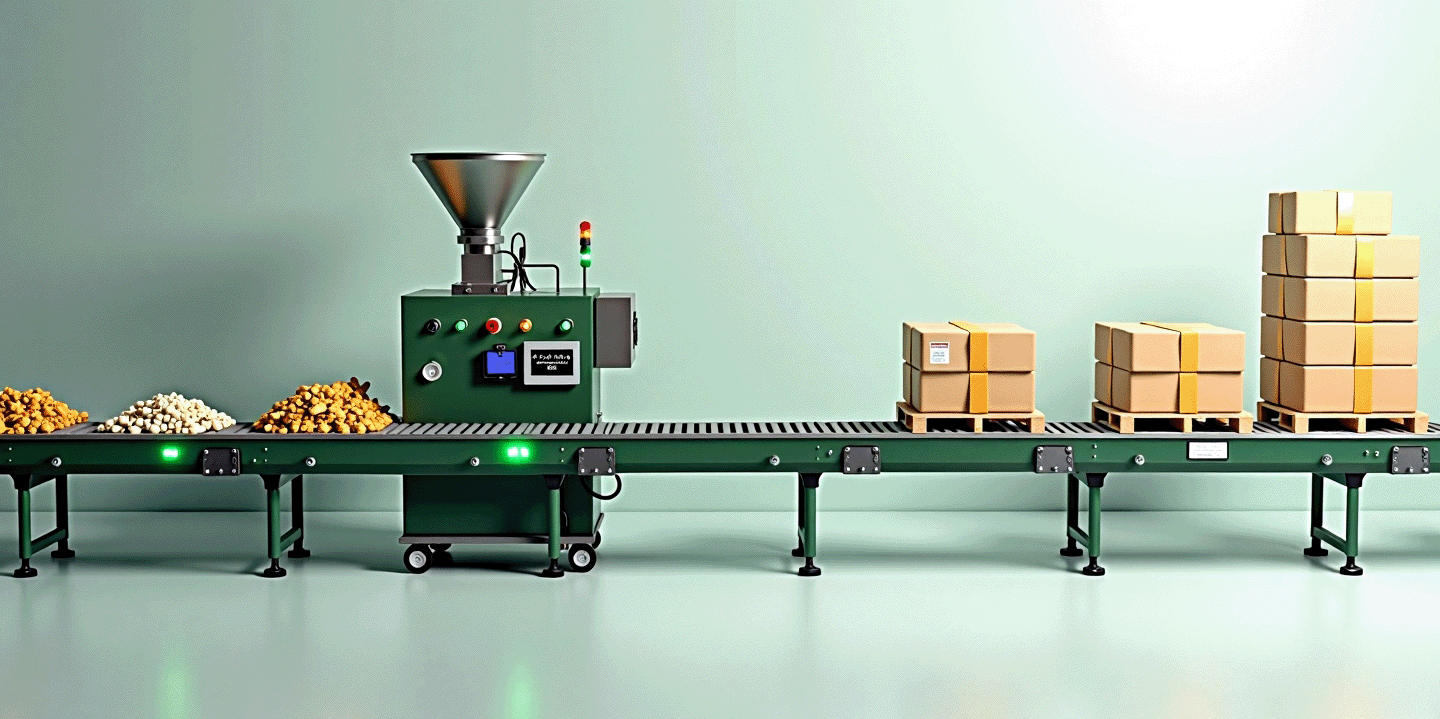

ETL abbreviation means Extract – Transform – Load, which are respectively: extraction, transformation and loading data. ETL is the process of extracting data from one or more sources (Extract), cleaning, transforming, and adapting it to meet the target requirements (Transform), and then loading it into the target system (Load). The goal is a consistent, high-quality set of information, ready for analytics, reporting, or powering other applications. In industry literature, ETL is described as a classic method of data integration, used among others for building data warehouses or powering data lakes and operational applications.

A common question is – what is ETL and how does it differ from newer approaches? ETL transforms data before loading it into the target, while ELT does this after loading (e.g., in the cloud or in a DWH engine). The choice depends on the architecture and project requirements.

How the ETL Process Works – Three Stages

Extract – extraction

We acquire data from files (CSV, Excel, XML/JSON), relational/NoSQL databases, APIs, event queues, or SaaS applications. Source filtering, handling increments (e.g., Change Data Capture), and quality control are important.

Transform – transformation

We clean and standardize formats (dates, currencies, encodings), remove duplicates, map dictionaries, join tables (join/lookup), calculate derived fields, enrich records (e.g., geocoding).

Load – loading

Record to data warehouse, data lake, operational database, PIM/DAM/CRM application – full or incremental. Good practices include transactions, post-load validations, and retry paths.

Such an ETL process is the core of most integration pipelines - regardless of the technology or cloud provider.

[Sources] → Extract → [Raw zone] → Transform → [Processed Zone] → Load → [System PIM/DWH/Datalake]

When ETL, and when streaming?

Choosing the right method of data flow is a strategic decision that depends on many factors, including the complexity of transformations, where they are performed, as well as the criticality and latency that your business can accept. The answer to this question often boils down to balancing the need for full control with the necessity for quick action. Check what and when you should choose:

- Choose ETL, when transformations are complex and you want to perform them outside the target system (e.g., before the data reaches DWH/SaaS), you have clearly defined batches and need strict control over data flow and quality.

- Choose ELT, when "computing" power and transformation tools are available in the target system (e.g., modern DWH/Lakehouse). First, you load the data, then you transform it close to the storage.

- Choose streaming / near-real-time, when low latency matters – e.g., product listings, alerts, IoT, real-time recommendations. Services such as Google Cloud Dataflow (based on Apache Beam) or native mechanisms in clouds can handle both batches and streams.

ETL Tools – Overview of the Ecosystem and Selection Criteria

On the market, you will find both "boxed" solutions and open-source or fully managed cloud services. In practice, ETL tools are selected based on the context, i.e., the environment (on-prem vs. cloud), data scale, team competencies, budget, and operational requirements (monitoring, SLA, versioning, IaC).

Sample categories and representatives (based on official documentation):

Microsoft Platforms

SSIS – an environment for building and executing ETL packages in the SQL Server/Azure ecosystem. Good support for connectors, transformations, and orchestration.

Azure Data Factory – managed orchestration service with data flows (ETL/ELT), integration with multiple sources, and triggers and monitoring.

AWS

AWS Glue – serverless data integration service; metadata catalog, ETL tasks, orchestration, logging, and integrations with data lakes/DWH.

Google Cloud

Dataflow – a managed engine for batch and streaming pipelines (ETL) based on Apache Beam; autoscaling, templates, and integrations with GCP products.

Open-source / distributions

Apache NiFi – visual, state "dataflow" for routing, processing, and mediation of systems; good for real-time flows and queue/priority management.

Pentaho Data Integration (Kettle) – classic ETL studio with a rich set of steps and jobs; also available in the Hitachi Vantara distribution.

Talend (Qlik Talend) – an extensive family of tools for data integration and quality (community and commercial editions).

In real ETL implementations, tools are often integrated with orchestrators (e.g., cloud schedules, CI/CD tools) and observability (logs, metrics, alerts). In practice, ETL tools are divided into graphical (drag-and-drop), script-based (Python/SQL/Beam), and managed services – each path has its pros and cons (startup speed vs. flexibility and versioning).

PIMCORE Datahub and ETL – imports/exports in practice

Pimcore 11 offers a native Datahub module implementing the ETL (Extract-Transform-Load) concept without additional plugins. Datahub enables data import via GraphQL, CSV, JSON, XML, and RestAPI. In terms of export, the CE and PE versions offer a GraphQL API, while the EE version additionally supports export to CSV, JSON, XML, and RestAPI formats, allowing Pimcore to function as a headless platform.

- Extraction and export of data Datahub provides dedicated GraphQL endpoints for retrieving data from Pimcore (PIM/MDM products, CMS content, DAM files). External clients specify in queries what data they need, receiving it in JSON format. GraphQL simultaneously serves as a transformation function – the data structure is formed at the query stage, eliminating redundant information.

- Datahub import supports import via GraphQL mutations and a graphical Data Importer handling CSV, XLSX, JSON, XML files. The administrator can map source data to Pimcore objects, define transformations and update strategies without programming.

- Data distribution Besides the API, Pimcore EE allows exporting to files (CSV, XML, JSON) with automatic delivery via SFTP or HTTP POST. Exports can be scheduled cyclically or triggered by events (webhooks).

Thanks to Datahub, companies streamline the flow of information without writing code, reducing integration costs and increasing business flexibility.

Summary

ETL is a mature, fundamental method of data integration that organizes and standardizes information across the organization. Although there are newer alternatives, such as ELT and streaming, the ETL process remains crucial for ensuring high-quality data, essential for analytics and efficient business operations. Proper ETL tools and the implementation of good design practices guarantee that data integration processes will be reliable and scalable.

FAQ – Frequently Asked Questions

ETL and ELT are not the same, although both are used for data integration. The main difference lies in the order of performing transformations. In the ETL process, data is transformed before being loaded into the target system (e.g., a data warehouse). In the ELT (Extract, Load, Transform) process, data is first loaded into the target (often into the cloud or a modern data store like a data lake), and transformations occur there, utilizing the computational power of the target system.

ETL processes are commonly used to power data warehouses (DWH) for reporting and business analytics purposes. Other examples include data migration between systems (e.g., from ERP to CRM), data integration from multiple sources (e.g., sales, marketing, and financial), or powering operational applications, such as PIM/DAM, with consistent product data.

Yes, Pimcore 11, thanks to the built-in Datahub module, allows for the execution of ETL processes without writing code. This tool enables graphical mapping of source data (from files, API, GraphQL) to Pimcore objects, defining transformations, and managing imports/exports easily. It also allows for the automation and scheduling of recurring tasks.